Data lake design patterns on AWS (Amazon) cloud

Srinivasa Rao • May 8, 2020

Build scalable data lakes on Amazon cloud (AWS)

Unlike traditional data warehousing, a complex data lake often involves a combination of multiple technologies. It is very important to understand those technologies and to learn how to integrate them effectively. This blog walks through different patterns for successfully implementing a data lake on the Amazon cloud platform.

Pattern I: Full Data lake stack

Pattern II: Unified Batch and Streaming model

Pattern III: Lambda streaming architecture

When building any scalable, high-performing data lake, whether on the cloud or on-premises, two broad groups of tools and processes play a critical role. The first group is used to build data pipelines and store the data. The second group is not directly involved in data lake design and development but is critical to the success of any data lake implementation; it includes data governance and data operations.

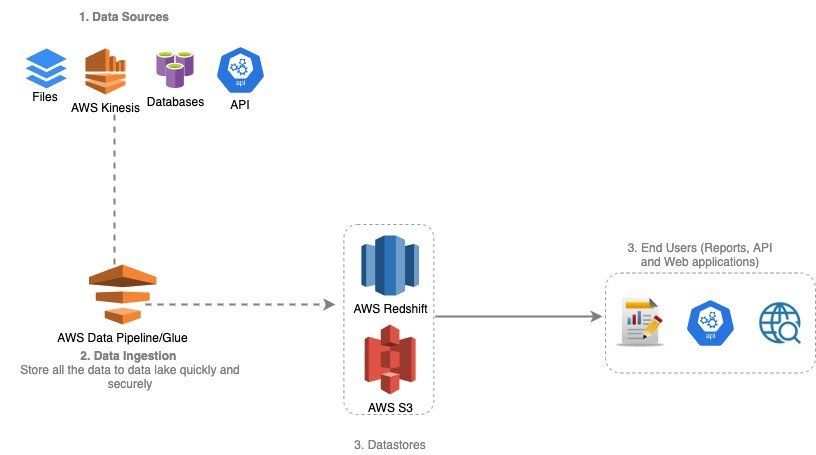

The diagram above shows how different Amazon managed services can be used and integrated to build a full-blown, scalable data lake. You may add or remove certain tools depending on your use cases, but the data lake implementation mainly revolves around these concepts.

Here is a brief description of each component in the diagram above.

1. Data Sources

The data can come from multiple disparate data sources, and the data lake should be able to handle all of the incoming data. The following are some of the sources:

• OLTP systems like Oracle, SQL Server, MySQL or any RDBMS.

• Various file formats such as CSV, JSON, Avro, XML, binary and so on.

• Text-based and IoT streaming data.

2. Data Ingestion

Collecting and processing the incoming data from various data sources is a critical part of any successful data lake implementation. It is often the most time-consuming and resource-intensive step.

AWS offers various highly scalable managed services for developing and running complex data pipelines at any scale.

AWS Data Pipeline

AWS Data Pipeline is a fully managed Amazon service for orchestrating batch-oriented data pipelines that move and process data on a schedule between AWS compute and storage services and on-premises data sources. It provisions the compute resources the work needs and is tightly integrated with other big data components like Amazon Redshift, Amazon DynamoDB, Amazon S3 and Amazon EMR.

AWS Glue

AWS Glue is a fully managed ETL service that enables engineers to build data pipelines for analytics quickly. Using its graphical interface in the management console, you can build data pipelines with a few clicks. Glue crawlers can automatically discover the data and register its schema in the AWS Glue Data Catalog.
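AWS Glue crawlers use classifiers to infer a schema from the data they scan. The toy function below is only a conceptual illustration of that idea and is not Glue's actual algorithm. It scans newline-delimited JSON records and widens each column's type as it sees new values. The type names and widening rules are assumptions chosen for the example.

```python
from __future__ import annotations

import json
from typing import Any


def json_type(value: Any) -> str:
    """Map a value parsed from JSON to a simple column type name."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, int):
        return "bigint"
    if isinstance(value, float):
        return "double"
    if isinstance(value, dict):
        return "struct"
    if isinstance(value, list):
        return "array"
    return "string"


def merge_types(current: str, observed: str) -> str:
    """Widen two observed types into one compatible column type."""
    if current == observed or observed == "null":
        return current
    if current == "null":
        return observed
    if {current, observed} == {"bigint", "double"}:
        return "double"  # mixed integer/decimal numbers widen to double
    return "string"  # any other conflict falls back to string


def infer_schema(json_lines: list[str]) -> dict[str, str]:
    """Infer a column -> type mapping from newline-delimited JSON records."""
    schema: dict[str, str] = {}
    for line in json_lines:
        record = json.loads(line)
        for column, value in record.items():
            schema[column] = merge_types(schema.get(column, "null"), json_type(value))
    return schema


sample = [
    '{"order_id": 1, "amount": 9.5, "city": "Austin"}',
    '{"order_id": 2, "amount": 10, "city": null}',
]
print(infer_schema(sample))  # {'order_id': 'bigint', 'amount': 'double', 'city': 'string'}
```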

Amazon EMR

Amazon EMR is a managed AWS service for the Hadoop/Spark ecosystem. You can use Amazon EMR for various purposes:

• To build data pipelines using Spark, especially when you have a lot of existing Spark code to migrate from on-premises.

• To lift and shift an existing Hadoop environment from on-premises to the cloud.

• To use Hive and HBase as part of your use cases.

• To build machine learning and AI pipelines using Spark.

Amazon EMR clusters can be created on demand and can auto scale depending on the need. Where you don't need production-grade SLAs, you can also use Spot Instances, which cost much less than regular On-Demand Instances but can be interrupted when AWS needs the capacity back.

3. Raw data layer

Object storage is central to any data lake implementation, and Amazon S3 serves as the raw layer. You can build a highly scalable and highly available raw layer on Amazon S3, which also provides high durability and availability backed by SLAs.
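One common way to organize the raw layer is a Hive-style partitioned prefix layout that uses key=value path segments. Glue crawlers and query engines such as Amazon Athena can map this layout to partitions. The helper below is a hypothetical sketch of that convention. The `raw/` prefix, the `source`/`dataset`/`dt` partition names and the bucket name are assumptions; the architecture does not prescribe them.

```python
from datetime import date


def raw_object_key(source: str, dataset: str, load_date: date,
                   file_name: str, prefix: str = "raw") -> str:
    """Build a Hive-style partitioned S3 key for the raw layer (hypothetical layout)."""
    for component in (source, dataset, file_name):
        if not component or "/" in component:
            raise ValueError(f"invalid key component: {component!r}")
    return (f"{prefix}/source={source}/dataset={dataset}/"
            f"dt={load_date.isoformat()}/{file_name}")


def s3_uri(bucket: str, key: str) -> str:
    """Combine a bucket name and key into an s3:// URI."""
    return f"s3://{bucket}/{key}"


key = raw_object_key("crm", "orders", date(2020, 5, 8), "part-0000.json")
print(s3_uri("example-datalake-raw", key))
# s3://example-datalake-raw/raw/source=crm/dataset=orders/dt=2020-05-08/part-0000.json
```

With boto3, a local file could then be uploaded under that key with `boto3.client("s3").upload_file(local_path, bucket, key)`.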

It also offers various storage classes, such as S3 Standard, S3 Intelligent-Tiering, S3 Standard-IA, S3 One Zone-IA and S3 Glacier, which suit different use cases and SLAs.
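S3 lifecycle rules are the usual way to automate moving data between these storage classes. The sketch below builds a lifecycle configuration in the dictionary shape that boto3's `put_bucket_lifecycle_configuration` accepts. The rule ID, the `raw/` prefix and the 30/90-day thresholds are illustrative assumptions. The 30-day minimum before moving objects to Standard-IA or One Zone-IA is an S3 requirement. The check that transition days increase is a sanity check this sketch adds on its own.

```python
import json

# S3 requires objects to be at least 30 days old before a lifecycle
# transition into these storage classes.
MIN_30_DAY_CLASSES = {"STANDARD_IA", "ONEZONE_IA"}


def lifecycle_rule(rule_id: str, prefix: str, transitions: list) -> dict:
    """Build one lifecycle rule from (days, storage_class) pairs."""
    previous_days = 0
    for days, storage_class in transitions:
        if days <= previous_days:
            raise ValueError("transition days must increase")  # this sketch's own check
        if storage_class in MIN_30_DAY_CLASSES and days < 30:
            raise ValueError(f"{storage_class} needs at least 30 days")
        previous_days = days
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [{"Days": d, "StorageClass": c} for d, c in transitions],
    }


config = {"Rules": [lifecycle_rule("raw-tiering", "raw/",
                                   [(30, "STANDARD_IA"), (90, "GLACIER")])]}
print(json.dumps(config, indent=2))

# Applying it requires AWS credentials, so it is not executed here:
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-datalake-raw", LifecycleConfiguration=config)
```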

4. Consumption layer

All the components mentioned so far are internal to the data lake and are not exposed to external users. The consumption layer is where you store curated and processed data for end-user consumption. End-user applications can be reports, web applications, data extracts or APIs.

The following are some of the criteria for choosing a database for the consumption layer:

• Volume of the data.

• Data retrieval patterns: whether applications run analytical queries involving aggregations and computations, or simply retrieve records based on filters.

• How data is ingested: in large batches or as high-throughput writes (IoT or streaming), and so on.

• Whether the data is structured, semi-structured, quasi-structured or unstructured.

• SLAs.
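Here is a deliberately simplified, hypothetical decision helper that makes these criteria concrete. It maps a few of them onto the three databases described below. The `Workload` fields and the rule order are assumptions for illustration. A real decision would also weigh data volume, cost and SLAs in much more detail.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    analytical_queries: bool         # aggregations/computations vs. filtered lookups
    high_throughput_writes: bool     # IoT/streaming writes vs. large batches
    data_shape: str                  # "structured", "semi-structured", ...
    needs_millisecond_latency: bool  # strict low-latency SLA for reads/writes


def suggest_store(w: Workload) -> str:
    """Return a candidate consumption-layer database (simplified heuristic)."""
    if w.analytical_queries:
        return "Amazon Redshift"    # columnar, parallel SQL analytics
    if w.needs_millisecond_latency or w.high_throughput_writes:
        return "Amazon DynamoDB"    # key-based access at any scale
    if w.data_shape == "semi-structured":
        return "Amazon DocumentDB"  # JSON documents, MongoDB compatible
    return "Amazon Redshift"        # fallback in this sketch: structured reporting data


print(suggest_store(Workload(False, True, "semi-structured", True)))  # Amazon DynamoDB
```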

Amazon DynamoDB

Amazon DynamoDB is a distributed NoSQL database for applications that need consistent, single-digit millisecond latency at any scale. It is fully managed and supports both key-value and document data models. It also supports a flexible schema and can be used for web, e-commerce, streaming, gaming and IoT use cases.

Amazon Redshift

Amazon Redshift is a fast, fully managed analytical data warehouse service that scales to petabytes of data. It provides a standard SQL interface that lets organizations use their existing business intelligence and reporting tools. Amazon Redshift is a columnar database distributed over multiple nodes, which allows it to process queries in parallel across those nodes.
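Parallel processing like this comes from spreading rows across compute nodes (slices) and letting each one work on its share. The toy sketch below imitates key-based distribution. Each row is assigned to a slice by hashing a distribution key, each slice computes partial sums independently, and the partials are then merged. It is only a conceptual model. The MD5-based hash, the slice count and the thread pool standing in for nodes are assumptions, not Redshift internals.

```python
import hashlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def slice_for(dist_key: str, num_slices: int) -> int:
    """Pick a slice with a stable hash (built-in hash() is randomized for str)."""
    digest = hashlib.md5(dist_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_slices


def partial_sums(rows: list, group_col: str, value_col: str) -> dict:
    """Aggregate the rows held by a single slice."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_col]] += row[value_col]
    return totals


def parallel_group_sum(rows: list, key_col: str, value_col: str,
                       num_slices: int = 4) -> dict:
    """Distribute rows by key, aggregate each slice in parallel, then merge."""
    slices = defaultdict(list)
    for row in rows:
        slices[slice_for(str(row[key_col]), num_slices)].append(row)
    merged = defaultdict(float)
    with ThreadPoolExecutor(max_workers=num_slices) as pool:
        partials = pool.map(lambda part: partial_sums(part, key_col, value_col),
                            slices.values())
        for partial in partials:
            for key, value in partial.items():
                merged[key] += value
    return dict(merged)


sales = [
    {"customer": "c1", "amount": 10.0},
    {"customer": "c2", "amount": 5.0},
    {"customer": "c1", "amount": 2.5},
]
print(parallel_group_sum(sales, "customer", "amount"))  # {'c1': 12.5, 'c2': 5.0}
```

Because the rows are grouped by the same column they are distributed on, each group lives on exactly one slice, so the final merge is cheap. This is the intuition behind co-locating data by key.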

Amazon DocumentDB

Amazon DocumentDB is a fully managed document database service that supports JSON data workloads. It is MongoDB compatible. It is fast, highly available and scales to huge amounts of data.

5. Machine Learning and Data Science

Machine learning and data science teams are among the biggest consumers of data lake data. They use this data to train their models, make forecasts and apply the trained models to new data.

AWS Glue

Please refer to the AWS Glue section above.

Amazon EMR

Please refer to the Amazon EMR section above.

Amazon SageMaker

Amazon SageMaker can be used to quickly build, train and deploy machine learning models at scale, or to build custom models with support for all the popular open-source frameworks.

Amazon ML and AI

Amazon has a large set of robust and scalable artificial intelligence and machine learning services. It also provides pre-trained AI services for computer vision, language, recommendations and forecasting.

a. Data Governance

Data governance on the cloud is a vast subject. It involves many things, such as security and IAM, data cataloging, data discovery, data lineage and auditing.

AWS Glue Data Catalog

The AWS Glue Data Catalog is a fully managed metadata management service that integrates with other components like data pipelines, Amazon S3 and so on. You can use it to quickly discover, understand and manage the data stored in your data lake.

See my blog for detailed information on data cataloging.

Amazon CloudSearch

Amazon CloudSearch is a managed search service, a kind of enterprise search tool, that lets you find information quickly, easily and securely.

AWS IAM

Please refer to my data governance blog for more details.

AWS KMS

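AWS KMS is a managed key management service that lets us manage encryption keys in the cloud. We can create, rotate, use and schedule the deletion of AES-256 encryption keys, just as we would in our on-premises environments. We can also call the AWS KMS API to encrypt and decrypt small payloads (up to 4 KB) directly. Larger data is typically encrypted locally with data keys generated by KMS (envelope encryption).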

b. Data Operations

Operations, monitoring and support are a key part of any data lake implementation. AWS provides various tools to accomplish this.

AWS CloudTrail

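AWS CloudTrail records the API calls and account activity in your AWS account, whether they are made through the console, SDKs or CLI. This helps you answer the questions of "who did what, where, and when?" within your AWS resources. The audit records can be delivered to Amazon S3 for long-term retention and analysis.

Please refer to my cloud operations blog for full details.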
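CloudTrail delivers its logs as JSON files with a top-level `Records` array. Each record carries fields such as `eventTime`, `eventSource`, `eventName`, `awsRegion`, `sourceIPAddress` and `userIdentity`. The sketch below turns such records into one-line "who did what, where, and when" summaries. The sample record is made up and uses documentation-reserved account and IP values. Note that an S3 `GetObject` call like this one only appears if the trail is configured to log S3 data events.

```python
import json

# Made-up sample in the CloudTrail log-file shape (not real account activity).
SAMPLE_LOG = """
{"Records": [{
  "eventTime": "2020-05-08T14:03:11Z",
  "eventSource": "s3.amazonaws.com",
  "eventName": "GetObject",
  "awsRegion": "us-east-1",
  "sourceIPAddress": "203.0.113.10",
  "userIdentity": {"type": "IAMUser",
                   "arn": "arn:aws:iam::111122223333:user/analyst"}
}]}
"""


def summarize(log_text: str) -> list:
    """Summarize CloudTrail records as 'when who what (where)' lines."""
    lines = []
    for record in json.loads(log_text).get("Records", []):
        who = record.get("userIdentity", {}).get("arn", "unknown")
        what = f"{record.get('eventSource')}:{record.get('eventName')}"
        where = f"{record.get('awsRegion')} from {record.get('sourceIPAddress')}"
        lines.append(f"{record.get('eventTime')} {who} {what} ({where})")
    return lines


for line in summarize(SAMPLE_LOG):
    print(line)
```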

Amazon CloudWatch

Amazon CloudWatch collects metrics and logs from your applications and AWS infrastructure, giving you a view of their state. You can build dashboards, store and search logs with CloudWatch Logs, and set alarms that notify you or trigger actions when a metric crosses a threshold.

Please refer to my blog for more details.
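CloudWatch alarms evaluate a metric against a threshold over a number of periods. With the "M out of N" setting, an alarm goes into the ALARM state when at least M of the last N datapoints breach. The function below is a simplified sketch of that evaluation for a "greater than threshold" comparison. It ignores CloudWatch's configurable treatment of missing data. The pipeline-lag metric in the example is hypothetical.

```python
def alarm_state(datapoints: list, threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified M-out-of-N evaluation (greater-than-threshold only)."""
    if not 1 <= datapoints_to_alarm <= evaluation_periods:
        raise ValueError("need 1 <= datapoints_to_alarm <= evaluation_periods")
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"  # simplification: not enough history yet
    window = datapoints[-evaluation_periods:]  # the last N datapoints
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# Hypothetical metric: minutes of ingestion lag, sampled once per period.
lag_minutes = [5, 12, 3, 15, 18]
print(alarm_state(lag_minutes, threshold=10, evaluation_periods=3,
                  datapoints_to_alarm=2))  # ALARM
```

The parameters loosely mirror `Threshold`, `EvaluationPeriods` and `DatapointsToAlarm` in CloudWatch's `PutMetricAlarm` API.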

The author has extensive experience in big data technologies and has worked in the IT industry for over 25 years in various capacities after completing his BS and MS in computer science and data science, respectively. He is a certified cloud architect and holds several certifications from Microsoft and Google. Please contact him at srao@unifieddatascience.com with any questions.

Database types

Realtime DB

The database should be able to scale and keep up with the huge amounts of data coming in from streaming sources such as Kafka and IoT devices. Latency SLAs should range from milliseconds to a few seconds. Users should also be able to query the real-time data and get millisecond or sub-second response times.

Data Warehouse (Analytics)

A data warehouse is specially designed for data analytics, which involves reading large amounts of data to understand relationships and trends across the data. The data is generally modeled dimensionally, using a star schema (denormalized dimensions) or a snowflake schema (normalized dimensions). The term data warehouse is used here in a slightly broader sense. What we are really addressing are data marts, which are subsets of the data warehouse that serve a particular business segment rather than the whole enterprise. In this use case, users not only query the real-time data but also perform analytics, machine learning and reporting.

OLAP

OLAP is a kind of data structure where the data is stored in multi-dimensional cubes. The values (or measures) are stored at the intersection of the coordinates of all the dimensions.
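A small in-memory model can show how such a cube works. Each measure value is stored under a tuple with one coordinate per dimension, and a roll-up sums away the dimensions you drop. The dimension names and sample facts below are hypothetical. This is a teaching sketch, not how any particular OLAP engine stores cubes.

```python
from collections import defaultdict

DIMENSIONS = ("year", "region", "product")  # hypothetical dimensions


def build_cube(facts: list, measure: str) -> dict:
    """Store each measure at the intersection of its dimension coordinates."""
    cube = defaultdict(float)
    for fact in facts:
        coordinates = tuple(fact[d] for d in DIMENSIONS)
        cube[coordinates] += fact[measure]
    return dict(cube)


def roll_up(cube: dict, keep: tuple) -> dict:
    """Aggregate away every dimension that is not listed in `keep`."""
    positions = [DIMENSIONS.index(d) for d in keep]
    result = defaultdict(float)
    for coordinates, value in cube.items():
        result[tuple(coordinates[i] for i in positions)] += value
    return dict(result)


facts = [
    {"year": 2019, "region": "east", "product": "A", "sales": 100.0},
    {"year": 2019, "region": "west", "product": "A", "sales": 50.0},
    {"year": 2020, "region": "east", "product": "B", "sales": 70.0},
]
cube = build_cube(facts, "sales")
print(cube[(2019, "east", "A")])  # 100.0
print(roll_up(cube, ("year",)))   # {(2019,): 150.0, (2020,): 70.0}
```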

This blog puts together the infrastructure and platform architecture for a modern data lake. The following were taken into consideration while designing the architecture:

• It should be portable to any cloud and on-premises with minimal changes.

• Most of the technologies and processing run on Kubernetes, so the platform can run on any Kubernetes cluster on any cloud or on-premises.

• All technologies and processes use auto scaling, so the platform allocates and uses the minimum resources needed at any given time without compromising the end results.

• It takes advantage of spot instances and other cost-effective features and technologies wherever possible to minimize cost.

• It uses open-source technologies to save on licensing costs.

• It auto-provisions most of the technologies, such as Argo Workflows, Spark and JupyterHub (the development environment for ML), which minimizes the use of provider-specific managed services. This not only saves money but also keeps the platform portable to any cloud, multi-cloud or on-premises environment.

Concept

At a very high level, the entire infrastructure and platform for modern data lakes consists of three main parts:

• Code repository

• Compute

• Object store

The main concept behind this design is “work anywhere at any scale”, at low cost and more efficiently. This design should work on any cloud, such as AWS, Azure or GCP, and on-premises. The entire infrastructure is reproducible on any cloud or on-premises platform with minimal modifications to the code. Below is the design diagram showing how the different parts interact with each other. The only prerequisites to implement this are a Kubernetes cluster and an object store.

Spark-on-Kubernetes is growing in adoption across ML platforms and data engineering. The goal of this blog is to create a multi-tenant Jupyter notebook server with built-in support for interactive Spark sessions, with Spark executors distributed as Kubernetes pods.

Problem Statement

Some of the disadvantages of using Hadoop (big data) clusters like Cloudera and EMR:

• Designing and building clusters takes a lot of time and effort.

• Maintenance and support.

• Shared environment.

• Expensive, as there is a lot of overhead such as master nodes.

• Not very flexible, as different teams need different libraries.

• Different cloud providers and on-premises platforms come with different big data implementations.

• Cannot be used by a large pool of users.

Proposed solution

The proposed solution contains two parts, which work together to provide a complete solution. It will be implemented on Kubernetes so that it works the same way on any cloud or on-premises.

I. Multi-tenant JupyterHub

JupyterHub allows users to interact with a computing environment through a web page. As most devices have access to a web browser, JupyterHub makes it easy to provide and standardize the computing environment for a group of people (e.g., a class of data scientists or an analytics team). This project will help us set up our own JupyterHub on a cloud and leverage the cloud's scalable nature to support large groups of users. Thanks to Kubernetes, we are not tied to a specific cloud provider.

II. Spark on Kubernetes (SPOK)

Users can spin up their own Spark resources by creating a SparkSession. They can request the number of executors, cores per executor, memory per executor and driver memory, along with other options. The Spark environment will be ready within a few seconds. Dynamic allocation is used if none of those options are chosen. All compute resources are terminated if they are idle for 30 minutes (configurable by the user). The code is saved to persistent storage and is available when the user logs in next time.

Data Flow Diagram
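A user's resource request could be translated into Spark configuration roughly as sketched below. The keys are standard Spark properties (`spark.executor.instances`, `spark.executor.cores`, `spark.executor.memory`, `spark.driver.memory`, `spark.dynamicAllocation.enabled`, `spark.dynamicAllocation.shuffleTracking.enabled`, `spark.kubernetes.container.image`). The helper functions, the in-cluster master URL and the container image name are assumptions. Shuffle tracking is enabled alongside dynamic allocation because Kubernetes deployments typically have no external shuffle service; this requires Spark 3.0 or later. The idle check mirrors the 30-minute default described above. How the platform actually reaps idle sessions is not specified, so treat that part as illustrative.

```python
from datetime import datetime, timedelta
from typing import Optional


def build_spark_conf(image: str, executors: Optional[int] = None,
                     executor_cores: Optional[int] = None,
                     executor_memory: Optional[str] = None,
                     driver_memory: Optional[str] = None) -> dict:
    """Translate a user's resource request into Spark-on-Kubernetes properties."""
    conf = {
        # Assumed in-cluster API server address; Spark requires an explicit port.
        "spark.master": "k8s://https://kubernetes.default.svc:443",
        "spark.kubernetes.container.image": image,
    }
    requested = {
        "spark.executor.instances": executors,
        "spark.executor.cores": executor_cores,
        "spark.executor.memory": executor_memory,
        "spark.driver.memory": driver_memory,
    }
    explicit = {k: str(v) for k, v in requested.items() if v is not None}
    if explicit:
        conf.update(explicit)
    else:
        # No sizing options chosen: fall back to dynamic allocation.
        conf["spark.dynamicAllocation.enabled"] = "true"
        conf["spark.dynamicAllocation.shuffleTracking.enabled"] = "true"
    return conf


def should_terminate(last_activity: datetime, now: datetime,
                     idle_timeout: timedelta = timedelta(minutes=30)) -> bool:
    """True once a session has been idle for at least `idle_timeout`."""
    return now - last_activity >= idle_timeout


print(build_spark_conf("example-registry/spark-py:3.0.0", executors=4,
                       executor_memory="4g"))
```

In a notebook, each key/value pair could be passed to `SparkSession.builder.config(key, value)` before calling `getOrCreate()`.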

Data governance on the cloud is a vast subject. It involves many things, such as security and IAM, data cataloging, data discovery, data lineage and auditing.

Security

Security covers overall security and IAM, encryption, data access controls and related topics. Please visit my blog for detailed information and implementation on the cloud: https://www.unifieddatascience.com/security-architecture-for-google-cloud-datalakes

Data Cataloging and Metadata

Data cataloging revolves around various kinds of metadata, including technical, business and data pipeline (ETL, dataflow) metadata. Please refer to my blog for detailed information and how to implement it on the cloud: https://www.unifieddatascience.com/data-cataloging-metadata-on-cloud

Data Discovery

Data discovery is part of data cataloging, which was explained in the last section.

Auditing

It is important to audit who is consuming and accessing the data stored in the data lake, which is another critical part of data governance.

Data Lineage

No single tool can capture data lineage at every level. Some lineage can be tracked through data cataloging, and other lineage information can be tracked through a few dedicated columns within the actual tables. Because most big data databases support complex column types, this can be tracked without much complexity. The following are some examples of data lineage information that can be tracked through separate columns within each table wherever required:

1. When the data was last updated/created (add last-updated and created timestamps to each row).

2. Who updated the data (data pipeline, job name, username and so on; use a Map, Struct or JSON column type).

3. How the data was modified or added (store the update history where required; use a Map, Struct or JSON column type).

Data Quality and MDM

Master data contains all of your business master data and can be stored in a separate dataset. This data is shared among all other projects/datasets. This helps you avoid duplicating master data, thus improving manageability. It also provides a single source of truth, so different projects don't show different values for the same entity. As this data is very critical, we will follow the type 2 slowly changing dimension approach, which is explained in detail in my other blog: https://www.unifieddatascience.com/data-modeling-techniques-for-modern-data-warehousing

There are many MDM tools available to manage master data more appropriately, but for moderate use cases you can store it in the database you are already using. MDM also deals with central master data quality and how to maintain it across the different life cycles of the master data.

There are several data governance tools available in the market, such as Alation, Collibra, Informatica, Apache Atlas, Alteryx and so on. When it comes to the cloud, my experience is that the cloud-native tools mentioned above should suffice for data lakes on the cloud.
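The type 2 slowly changing dimension approach keeps history by expiring the current version of a record and inserting a new one, instead of overwriting it. Here is a minimal in-memory sketch of that logic. The `DimRow` fields, the 9999-12-31 open end date and the `apply_scd2` helper are hypothetical names and conventions chosen for illustration, not the method from the linked post.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from datetime import date

OPEN_END = date(9999, 12, 31)  # conventional "still current" end date (assumption)


@dataclass(frozen=True)
class DimRow:
    key: str              # business key, e.g. a customer id
    attributes: tuple     # tracked attributes, e.g. (name, city)
    valid_from: date
    valid_to: date = OPEN_END
    is_current: bool = True


def apply_scd2(history: list[DimRow], key: str, attributes: tuple,
               as_of: date) -> list[DimRow]:
    """Return a new history after applying one incoming master-data record."""
    current = next((r for r in history if r.key == key and r.is_current), None)
    if current is not None and current.attributes == attributes:
        return list(history)  # nothing changed: keep history as-is
    updated = [
        # Expire the old version rather than overwriting it.
        replace(r, valid_to=as_of, is_current=False) if r is current else r
        for r in history
    ]
    updated.append(DimRow(key, attributes, valid_from=as_of))
    return updated


h = apply_scd2([], "C1", ("Alice", "Austin"), date(2020, 1, 1))
h = apply_scd2(h, "C1", ("Alice", "Dallas"), date(2020, 5, 8))
for row in h:
    print(row)
```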